Well folks, I have quite a few little points to mention today. I haven't posted in over a week because I didn't have enough to talk about. I don't like to write a small blurb every day; I prefer to wait until I have something substantial to write about. With everything that has gone on over the last week or so, I think I can throw it all together into one blog post. A mystery stew of sorts.

First off, bug triaging. I have been spending a lot of my time triaging unconfirmed bugs for the Firefox Password Manager. I have been able to get the query of bugs down from 38 to 23, and I expect to drop that number to 13 or fewer by next week. By my math, the unconfirmed Password Manager bugs are down about 39% after the first week and should drop by roughly another 43% by next week. These are very good numbers from where I sit.
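For anyone who wants to check that math, here is a minimal Python sketch. The function name is only for illustration; it is not part of Bugzilla or any Mozilla tool, and the only inputs are the bug counts quoted above.

```python
def percent_drop(before: int, after: int) -> float:
    """Return the percentage decrease from `before` to `after`."""
    return (before - after) / before * 100


# Bug counts quoted in the post: 38 at the start, 23 now, 13 expected next week.
first_week = percent_drop(38, 23)    # drop over the first week
second_week = percent_drop(23, 13)   # expected drop over the second week
overall = percent_drop(38, 13)       # expected drop across both weeks

print(f"{first_week:.1f}% {second_week:.1f}% {overall:.1f}%")
```

Note that each weekly figure is measured against that week's starting count, which is why the two weekly percentages do not simply add up to the overall drop.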

Next at bat, Major Update. So what is Major Update? It is where we offer users running an older version of Firefox an update to the latest version; in this case, users running Firefox 1.5 will be updated to Firefox 2.0.0.4. Major Update was supposed to get pushed out last week, but there was a little snag with the CJKT (China, Japan, Korea, Taiwan) locales regarding the default homepage and default search engine. The fix has since been tested to death, and we are pretty satisfied with the results. We are happy with the product and confident it will be successful when it is released into the wild soon.

The next major event of the week is the Gran Paradiso Alpha 6 release. There are quite a few improvements, and the developers have been working long, hard hours to get it to where it is today. I think everyone is pretty happy with the product as it stands. There is still much work to be done, but for an Alpha, things are looking pretty good. I have been using A6 for a while now and have only run into a couple of minor hitches, which were fixed fairly quickly after I reported them. Kudos, developers.

On a lighter note, last Friday was the intern hootenanny. There is not much that CAN be said about this event. It was a lot of fun and most of the interns showed up. We talked. We laughed. We played Catchphrase. The party got shut down at 10:30 by the man, so we moved it to the hot tub. Suffice it to say, we had a good time. It was a good icebreaker event for all the interns. I believe that Andrew Stein, marketing intern extraordinaire, took some excellent pictures. If you ask him nicely, he may share them with you.

The final event of the last few days was my aerial tour of the Bay Area. With Preed piloting and Alex Buchanan along for the ride, we took to the skies over the bay. This was originally supposed to be an aerial tour of San Francisco. Unfortunately, the cloud cover over the west end of the bay was too thick; if we had tried to go to San Francisco, we wouldn't have seen anything. So we opted for a tour of the east bay area (Mountain View, Palo Alto, Redwood City, Livermore, San Jose). Even though I was a little down about not seeing San Francisco, I was not disappointed at all. It was still a nice trip. I was able to snap quite a few pictures, which I added to the Flickr set from the time Reed went flying with Preed. You may notice that I renamed the "Preed & Reed's Flight Tour of Pismo Beech" set to "Bay Area Aerial View". Since the photos all cover pretty much the same area, it made sense to consolidate them into a single set.

Oh, and let me tell you about "engine out procedures". This is basically where one forces the nose of the plane to drop, taking the plane with it on a quick descent of a few hundred feet in a few seconds, before taking control of the plane back and leveling it off. While this scared the crap out of me, it was a lot of fun. I couldn't put it any more eloquently than Preed: "it is like being on a roller coaster without any of the safety devices." Awesome, Preed...just awesome. Hopefully we can get out to do an aerial tour of San Francisco soon. Maybe we will get out and do an aerial sunset tour of the coast as well. If so, expect many more photos to come.

Anyway, that is all for now.

Chimo!

Media:

My Flickr - 1355 Photos (Slideshow)

Recently Added: FSOSS 2007 and Raptors Game.

Archive: All Hands Team Building Event, Computer History Museum, Intern BBQ, Alcatraz, Napa, San Luis Obispo trip, Santa Cruz trip, Jays game at Giants Stadium, Mountain View tour, Bay Area - Aerial View, Bay 2 Breakers

Videos: Bay 2 Breakers: Jonas the Dancing Fox (video), Bay 2 Breakers: Salmon (video)

Wednesday, June 27, 2007

Wednesday, June 13, 2007

Litmus - An Analytical Approach (Update)

As some of you may already know, I have been working on a spreadsheet to track and log testday results from Litmus. My first release on Google Spreadsheets has received some excellent feedback and some good suggestions. After deciding to implement these suggestions, I toiled with Google Spreadsheets for a good couple of hours trying to get it to do what I wanted (mainly with charts). The best analogy I have for how that went is that it was like two children sitting in opposite corners of a small room, noses to the wall, screaming "YOU DO IT!", "NO, YOU DO IT!", "NO, YOU DO IT!"...

You get the idea.

So I started to chew on ideas of how I could improve on the spreadsheet, without Google, but still allow the spreadsheet to be shared. I eventually settled on Microsoft Excel 2007 as my program of choice for the spreadsheet. I wanted to use Open Office, but found Microsoft Excel 2007 to be much more prepared for the task at hand. Once I had exported my Google spreadsheet to .xls and imported it into Excel, it was just a matter of making the improvements.

Now that I had the spreadsheet set up the way I wanted, I ran into the second dilemma: how was I going to share this as easily as Google does it? My first thought was, "Google must know!" So I googled a bunch of different searches trying to find a way to "share" Excel spreadsheets.

After about an hour of no luck, I remembered that Microsoft Office 2007 allows sharing documents on a network and over the internet through SharePoint. After a little research, I realized that I would have to set up a SharePoint server, something I was not prepared to do. It sounded way too grandiose a thing just to share out one little spreadsheet.

I finally thought to myself, "What if I can convert the spreadsheet into HTML? People won't be able to modify the spreadsheet, but they will at least be able to get to it and view it easily enough." So I did a little digging through the Excel menus and found that it has a facility that allows me to convert XLS files to "Web Pages".

But wait! This is a Microsoft product. It is probably going to convert it to HTML, add all kinds of ActiveX crap, and thus not work properly in Firefox.

Much to my surprise, this was not the case. Excel quickly converted my spreadsheet into a bunch of files that made it cross-browser compatible. Each worksheet of the spreadsheet was created in its own .htm file, all the charts were conveniently converted into .gif and .png images, and Excel even kept all my formatting and styling through a .css file.

All that was left was to try it out. Upon loading the main .htm file from my desktop, I noticed that everything was laid out as it was in the Excel file. The charts had become a bit degraded, but they are still quite readable. Excel even created tab buttons at the bottom of the screen so that I could navigate from sheet to sheet.

That being successful, I just uploaded the files to the appropriate locations on my people account, which is where it exists now.
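Excel did the conversion for me, but the underlying idea (turn rows of data into a static, read-only HTML table) can be sketched in a few lines of Python. To be clear, this is not what Excel does internally; the function name and the sample row are made up for illustration and are not real Litmus results.

```python
import html


def rows_to_html_table(rows):
    """Render a list of rows (first row is the header) as a static HTML table."""
    header, *body = rows
    lines = ["<table>"]
    # Header cells use <th>; every value is escaped so it cannot break the markup.
    lines.append("  <tr>" + "".join(f"<th>{html.escape(str(c))}</th>" for c in header) + "</tr>")
    for row in body:
        lines.append("  <tr>" + "".join(f"<td>{html.escape(str(c))}</td>" for c in row) + "</tr>")
    lines.append("</table>")
    return "\n".join(lines)


# Hypothetical sample data, for illustration only.
sample = [["Testday", "Testers", "Results"], ["example-day", 10, 250]]
print(rows_to_html_table(sample))
```

A page built this way is view-only, which matches the trade-off described above: easy to reach and read, but not editable by visitors.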

For your viewing pleasure:

http://people.mozilla.com/~ahughes/Litmus_Results.htm

While this isn't a perfect solution, at the very least it allows people to view the Litmus Testday results quite easily now. I can also modify the spreadsheet, save to html and easily upload any changes. I can also import the raw data into Google Spreadsheets for collaboration if needed. It just won't have the pretty charts there.

I hope you find it somewhat useful.

Giants 3, Blue Jays 2

Last night, Cesar and I were given the opportunity to go see the San Francisco Giants play my Toronto Blue Jays at AT&T Park thanks to a free ticket courtesy of Dan Portillo. Thanks Dan.

Unfortunately, since we took the Caltrain and didn't board until 7:01 p.m., we missed the first 3 innings of the game, which would turn out to be the most exciting innings of the game. You see, all of the runs were scored within the first 3 innings.

It wasn't a total loss, however. I was able to see AT&T Park, which was a good experience. For anyone who hasn't been inside the walls of AT&T Park, it feels like an old ballpark and overlooks the bay. It definitely felt a lot different from watching my Jays play back home in the SkyDome. I was also able to snap a few pictures while at the game, which I have posted on my Flickr. To see them, follow the link I added at the top of this page.

On a final note, next time I need to dress warmer. A t-shirt, shorts, and cons are not ideal attire for a San Francisco night, as I learned the hard way.

I look forward to the next time I can watch the Jays play the Giants. Hopefully we can do better next time. Go Jays!

Monday, June 11, 2007

Litmus - An Analytical Approach

As promised, I am writing about my experience with Litmus this week and my findings. I have heard mention that there is some worry about the turnout for this week's testday, so I decided to go and look at the numbers.

At first glance, these worries seemed to be completely unfounded, especially since I had completed 197 tests myself. So I decided to conduct a little research of my own.

I took my time and queried every testday completed since August 2005, in search of the number of testers and the number of results for each testday. Having found these numbers, I plugged them into a spreadsheet and analyzed them. To make the data easier to see, I also created three charts: one for the number of results for each testday, another for the number of testers for each testday, and a final chart displaying the ratio of results to testers. This last chart is used to "score" how well that testday went.

Before I go into my findings I would like to show you copies of the charts for your reference:

Number of Results Per Testday

Number of Testers Per Testday

Testday "Score" - Results per Tester

After going over these numbers, I can theorize the following:

- Thunderbird tests have always had a low turnout when compared to Firefox tests. Since 2007-06-08 was the first time Firefox and Thunderbird were separated into their own testdays and held at the same time, we are seeing the results more accurately than before.

- Our highest numbers of testers always fall during school semesters, so the fact that school is out in the summer could be contributing to low numbers as well.

- It was mentioned that IRC traffic was low during testday. In my opinion, IRC traffic is not a good indicator of a successful testday. A low amount of traffic tells me that there were few issues, which is a good thing.

- Looking at the charts as they stand, it is tough to extract any particular pattern.

- It should be noted that all this does is show that the current situation isn't in a big decline. We should still try to come up with ideas on how to promote the testdays better to get even more exposure.

- The days where we had 30+ user participation were Seneca test days.

It is my hope that this will become a good initial dataset to help with deciding how to draw more people to the testdays.

I think the best line of defense is to get out to the schools more. Not just the colleges and universities either. Perhaps try to get to graduating high school students entering into this field.

As a sidebar, I have a few ideas for Litmus and Bugzilla that I would like to air.

First off, the test cases in Litmus need to be cleaned up. Often when I run a Litmus test run on a specific platform, there will be tests that say "mac only" or "linux only" when I am running Windows tests (for example). This means that I either have to go run that test on the other platform so I can mark the test as "pass" just to get my 100% coverage, or I have to leave it as "not run" and never achieve 100% coverage.
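To show what I mean, here is a rough Python sketch of coverage that only counts the tests that apply to the platform being tested. This is not how Litmus stores or computes anything; the dictionary layout, the field names, and the sample tests are all assumptions made up for the example.

```python
def coverage(tests, platform):
    """Percent of applicable tests that were run on `platform`.

    Each test is a dict with a "platforms" set (empty means all platforms)
    and a boolean "run" flag. This layout is an assumption for the sketch.
    """
    applicable = [t for t in tests if not t["platforms"] or platform in t["platforms"]]
    if not applicable:
        return 100.0  # nothing applies, so nothing is left to run
    done = sum(1 for t in applicable if t["run"])
    return done / len(applicable) * 100


# Hypothetical test cases, not real Litmus entries.
tests = [
    {"name": "install", "platforms": set(), "run": True},
    {"name": "dock icon", "platforms": {"mac"}, "run": False},
    {"name": "tray icon", "platforms": {"windows"}, "run": True},
]
print(coverage(tests, "windows"), coverage(tests, "mac"))
```

With the "mac only" test left out of the Windows total, a Windows tester can actually reach 100% without touching another machine.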

Secondly, Litmus should be able to recognize multiple build IDs for one program. For example, every night there is a nightly of Minefield put out with a different build ID. So if I want to continue my tests from the previous day, I have to fool Litmus into thinking I am still running the old build ID. Perhaps we can program a window of build IDs into Litmus?
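A window like that could be sketched as follows in Python. I am assuming here that a build ID is a timestamp in the form YYYYMMDDHH, and the function names and the three-day default are my own inventions for the example, not anything that exists in Litmus.

```python
from datetime import datetime, timedelta


def parse_build_id(build_id: str) -> datetime:
    """Parse a build ID, assuming the YYYYMMDDHH layout."""
    return datetime.strptime(build_id, "%Y%m%d%H")


def within_window(build_id: str, reference_id: str, days: int = 3) -> bool:
    """True if `build_id` is at most `days` days older or newer than `reference_id`."""
    delta = abs(parse_build_id(build_id) - parse_build_id(reference_id))
    return delta <= timedelta(days=days)


# Hypothetical build IDs one day apart, then nine days apart.
print(within_window("2007061204", "2007061104"))
print(within_window("2007062004", "2007061104"))
```

The size of the window is the real design question: too small and testers still have to fake their build ID, too large and results from meaningfully different builds get lumped together.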

My final idea is related to Bugzilla. Is there a way we can implement some kind of duplicate bug detection, like Digg's duplicate story submission detection? For those that do not know, when a user submits a story to Digg, it checks to see if that story might already have been submitted. I believe this is based on the URL and the title and description the user gives for the story. It then returns a list of possible duplicate stories that the user can read to verify that they aren't dupes, or the user can submit their story anyway. I know the system isn't perfect, but I believe that we could learn something from it and that it would make Bugzilla better, both from the end-user perspective and from Bugzilla's maintainers' perspective. I realize that this will not help with our current duped bugs, but it would help as a preventative measure for avoiding future duplicate bugs.
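I don't know how Digg actually does its matching, so the following is only a guess at the general idea: compare the new summary against existing ones and list anything that looks close. This Python sketch uses the standard library's `difflib.SequenceMatcher` for the comparison; the function name, the 0.6 threshold, and the sample bugs are all hypothetical.

```python
from difflib import SequenceMatcher


def possible_duplicates(new_summary, existing, threshold=0.6):
    """Return (bug_id, score) pairs whose summaries resemble `new_summary`, best first."""
    matches = []
    for bug_id, summary in existing.items():
        # ratio() is 1.0 for identical strings and approaches 0.0 for unrelated ones.
        score = SequenceMatcher(None, new_summary.lower(), summary.lower()).ratio()
        if score >= threshold:
            matches.append((bug_id, round(score, 2)))
    return sorted(matches, key=lambda m: m[1], reverse=True)


# Hypothetical bug summaries, not real Bugzilla entries.
existing = {
    1: "Password manager does not save login",
    2: "Crash when printing PDF",
}
print(possible_duplicates("Password Manager does not save login", existing))
```

Plain string similarity will miss duplicates that are worded differently, so at best this is a first filter that hands the reporter a short list to read before filing.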

Before I leave you for today, I would like to pass along an idea that tchung mentioned to me today. What if testers on Litmus could vote on whether they found a certain test useful or not, like on Digg, reddit, del.icio.us, or any of those sites? Sounds kind of interesting to me.

Anyway, that is all for today.

Stay tuned for another post later this week.

Bugs, Litmus, and a Super Fun Testday!

So another week at Mozilla, and a whole new set of interesting things to tell you about. Well, interesting if you like QA...and who doesn't?!

For the majority of the week, I triaged bugs. This was definitely interesting. I am afraid that I am still learning the ropes in Bugzilla, but I am getting better. The biggest hurdle was wrapping my head around the different statuses. It is one thing to know what the statuses mean; it is another to know how and when to use them. Knowing what situation warrants a particular status comes with time and practice. Don't worry, there are quite a few people on IRC who are watching the bugs, and they are fairly quick to let you know where you may have erred.

On the plus side, I was able to clean up all the critical and major Password Manager bugs that were sitting around unconfirmed. Quite a few of them were almost two years old! All that is left now are 15 bugs sitting unconfirmed, most of which are enhancements :)

The next major event of the week was this week's testday. I focussed my testing on Minefield 3.0a6 along with a few other participants, and others took on Thunderbird 2.0.0.4 testing. Overall, I think the testing went very well. From what I saw, there were no major problems with either program. There were a few bugs found, but no showstoppers. Also, I was able to complete 197 tests. I do not know what got into me, but I just couldn't stop. I kept plugging away, submitting bugs, and before I knew it, it was 5pm and I had finished the testday with 197 tests complete. I was quite surprised.

On the lighter side of life, I would like to highlight a couple of items of recreational interest that happened throughout the week.

First off, I was able to get out to the driving range for the first time in a while with a couple people from the office. It was quite relaxing and felt really good to get out and swing my clubs again. Perhaps I will get out on a course sometime soon :)

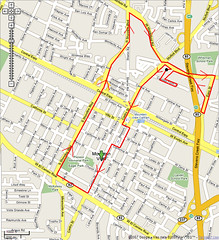

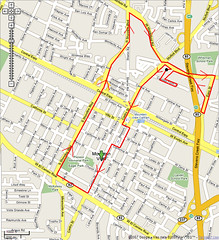

Secondly, on Sunday, I decided to take myself on a quick tour of Mountain View and take some pictures along the way. I walked from the Oakwood apartments, down to the Caltrain station, around downtown Mountain View, down to the ECR, across to Shoreline, and then across Middlefield to Easy Street, and finally back to the Oakwood apartments.

My Mountain View Walking Route

For your viewing pleasure, I have created a slideshow of the 77 photos I captured. You can find a link to it in the media section of this page, above this post.

I want to go into more detail about Litmus and the testday, but I want to reserve that for its own post. Stay tuned...

Thursday, June 7, 2007

Asus $189 Laptop runs Firefox

Asus unveiled their $189 laptop this week at Computex, which they say will be available all over the world, not just in developing nations. It runs "something that looks like Windows" (*cough* Linux), "an office system compatible to Microsoft Office" (Open Office, anyone?), and Firefox.

Dubbed the 3ePC, it uses a more conventional interface than the OLPC and comes with a 2GB flash drive and 512MB of RAM.

Read the full article here:

http://www.pcpro.co.uk/news/114773/asus-stuns-computex-with-100-laptop.html

Monday, June 4, 2007

I choose you AUS2

Last week was a very interesting and busy week. The high point was getting the next update to Firefox 1.5/2 and Thunderbird 1.5 out the door. There was a lot of testing to be done to make sure that there would be as little negative user impact as possible. A few of us had to work into the night, but the hard work paid off. Ultimately, we were able to get Firefox 1.5.0.12 and Firefox 2.0.0.4 out to users.

Not everything went completely smoothly, as things rarely do in the real world. Once users started getting their updates, we saw a 3-fold increase in bandwidth. We were able to work our way through the bandwidth issues, however, and everything turned out pretty well. Once we got over that hurdle, it was just a waiting game to see if there were any major problems reported by users. I think we can safely say that the minor update launch was a success.

What is involved in testing Firefox to ensure smooth updates? It is a fairly straightforward process. I will not go into all the details, but it generally involves downloading an older version of Firefox, then changing a config file so that Firefox checks for updates from a test server. Once the update is installed, we ensure the browser is not "broken" by running a few checks.

For those who are curious, there are a few terms you should be aware of when working on updates for the community. The first is a "partial" update. This is when you are updating from one version to the next version of said software. The second is a "complete" update. This is when you are updating from one version to the latest available version of said software. The third type of update check is known as a "fallback". A fallback is tested by updating to a new version of the software, but instead of accepting the update, we tell Firefox to update later. We then go into a config file and change the update status from "pending" to "failed". This makes Firefox think that an update was unsuccessful, and it attempts to update to the latest version. While this process is fairly straightforward, there is a lot of work to do for update testing because Firefox has a lot of different versions and locales and is in use on many different platforms. But this hard work pays off in the end and gives you a feeling of satisfaction once the project is deemed a success.
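The "pending" to "failed" step is a manual edit, but for illustration it could be scripted along these lines in Python. The file name and location below are placeholders I chose for the example, not a statement of where Firefox keeps its update status, and the demo runs against a throwaway temporary file rather than a real profile.

```python
from pathlib import Path
import tempfile


def mark_update_failed(status_file: Path) -> bool:
    """Rewrite a status of "pending" to "failed"; return True if it was changed."""
    status = status_file.read_text().strip()
    if status != "pending":
        return False  # leave any other status untouched
    status_file.write_text("failed\n")
    return True


# Demonstration against a throwaway file, not a real Firefox install.
with tempfile.TemporaryDirectory() as tmp:
    status_file = Path(tmp) / "update.status"  # hypothetical file name
    status_file.write_text("pending\n")
    print(mark_update_failed(status_file), status_file.read_text().strip())
```

The check for "pending" matters: the fallback test only makes sense when an update has been downloaded and deferred, so any other status is left alone.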

As soon as we got that project out the door, we immediately switched gears and started working on the next major project. I am quickly learning that in the software business, there is a constant flow of work. As soon as one project is complete, the next project begins, if it hasn't already. This makes for a very interesting career, if you are up to the challenge. I can honestly say that my experience from other industries, mainly the automotive industry, has prepared me for this aspect of the software industry.

The next big project this week was starting some of the testing on Firefox 3, currently dubbed Minefield 3.0 Alpha 5 Pre. The extent of testing Firefox 3 this past week was running Basic Functionality Tests (BFTs) on each of the main platforms: Windows XP, Windows Vista, Mac OS X, and Linux. Running the BFTs themselves is again fairly straightforward, but ultimately there is a lot of work involved. The BFT series consists of 196 tests ranging from installation to GUI and layout to security checks to software updates. Using a program called Litmus, we can track the progress of these tests. While running these tests, it is not uncommon to come across a bug that is unrelated to the test you are running.

While running the BFTs for Minefield on Friday, I came across five bugs, some related to the test I was running and some completely unrelated. If you are ever in doubt, ask someone on IRC to confirm what you are seeing and then file a bug.

After all of this testing is complete, we use another tool of the trade: the wiki. Using a wiki, we log what tests we ran, on what version, on what platform, who ran the tests, and any problems we noticed along the way. This allows everyone to get a snapshot of where the problems exist and where we were successful.

The third major task of the week was triaging bugs. Using Bugzilla, a tracking system used to log bugs or issues with our software, we go through and double-check any bugs that are sitting as "unconfirmed". This allows us to identify any serious problems, as well as items that were isolated incidents and have long since been fixed. The process involves finding bugs in Bugzilla that need to be checked and trying to reproduce the issue noted in each bug. If you want to get involved in this aspect of QA, I suggest you come to #testday on IRC sometime. Be wary, some bugs in Bugzilla can be pretty technical. Just seek out the ones that you think you can handle. No one is expected to know everything.

As you can see from my ever expanding post here, that Mozilla had quite a busy week. The way things are looking, there are going to be many busy weeks to come. I think I would be more worried if we weren't busy. So this is definitely a good sign.

On that note, I must bid you adieu.

See you next week!

Not everything went smoothly, as is usually the case in the real world. Once users started getting their updates, we saw a 3-fold increase in bandwidth. We were able to work our way through the bandwidth issues, however, and everything turned out pretty well. Once we got over that hurdle, it was just a waiting game to see if users reported any major problems. I think we can safely say that the minor update launch was a success.

What is involved in testing Firefox to ensure smooth updates? It is a fairly straightforward process. I will not go into all the details, but it generally involves downloading an older version of Firefox and then changing a config file so that Firefox checks for updates from a test server instead of the live one. Once the update is installed, we ensure the browser is not "broken" by running a few checks.
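To give a rough idea of what that config change can look like, here is a minimal Python sketch. It is not our actual tooling: the preference name, the `user.js` file name, and the test server URL in the usage note are all assumptions made for illustration.

```python
from pathlib import Path

# Assumption: update checks are redirected by a preference with this name.
UPDATE_URL_PREF = "app.update.url.override"


def point_updates_at(prefs_file: Path, test_url: str) -> str:
    """Write a pref line that redirects update checks to test_url."""
    line = f'user_pref("{UPDATE_URL_PREF}", "{test_url}");'
    existing = prefs_file.read_text() if prefs_file.exists() else ""
    # Drop any earlier override so the file ends up with exactly one.
    kept = [l for l in existing.splitlines() if UPDATE_URL_PREF not in l]
    prefs_file.write_text("\n".join(kept + [line]) + "\n")
    return line
```

Calling something like `point_updates_at(Path("user.js"), "https://updates.test.invalid/update.xml")` inside a throwaway test profile would leave every other preference alone and only swap the update URL.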

For those who are curious, there are a few terms you should be aware of when working on updates for the community. The first is a "partial" update. This is when you are updating from one version to the next version of the software. The second is a "complete" update. This is when you are updating from one version to the latest available version of the software. The third is a "fallback" check. We test a fallback by starting an update to a new version, but instead of applying the update right away, we tell Firefox to update later. We then go into a config file and change the update status from "pending" to "failed". This makes Firefox think that the update was unsuccessful, so it attempts to update to the latest version again. While this process is fairly straightforward, update testing is a lot of work because Firefox has many different versions and locales and is used on many different platforms. But the hard work pays off in the end, and it is very satisfying once the project is deemed a success.
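The "pending" to "failed" trick in the fallback check is simple enough to sketch in a few lines of Python. This is only an illustration: the function name is made up, and I am assuming the status lives in a small text file that holds nothing but the status word.

```python
from pathlib import Path


def force_fallback(status_file: Path) -> bool:
    """Rewrite a 'pending' update status as 'failed'.

    Returns True if the file was changed, False otherwise.
    """
    status = status_file.read_text().strip()
    if status != "pending":
        # No update is waiting to be applied, so leave the file alone.
        return False
    status_file.write_text("failed\n")
    return True
```

The check for "pending" is there on purpose: if no update is waiting, flipping the status would test nothing, so the sketch refuses to touch the file.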

As soon as we got that project out the door, we immediately switched gears and started working on the next major project. I am quickly learning that in the software business, there is a constant flow of work. As soon as one project is complete, the next project begins, if it hasn't already. This makes for a very interesting career, if you are up to the challenge. I can honestly say that my experience from other industries, mainly the automotive industry, has prepared me for this aspect of the software industry.

The next big project this week was starting some of the testing on Firefox 3, currently dubbed Minefield 3.0 Alpha 5 Pre. Testing Firefox 3 this past week consisted of running Basic Functionality Tests (BFTs) on each of the main platforms: Windows XP, Windows Vista, Mac OS X, and Linux. Running the BFTs themselves is again fairly straightforward, but ultimately there is a lot of work involved. The BFT series consists of 196 tests ranging from installation to GUI and layout to security checks to software updates. Using a program called Litmus, we can track the progress of these tests. While running them, it is not uncommon to come across a bug that is unrelated to the test you are running.

While running the BFTs for Minefield on Friday, I came across five bugs. Some were related to the test I was running, and some were completely unrelated. If you are ever in doubt, ask someone on IRC to confirm what you are seeing and then file a bug.

After all of this testing is complete, we use another tool of the trade: the wiki. Using a wiki, we log what tests we ran, on what version, on what platform, who ran the tests, and any problems we noticed along the way. This allows everyone to get a snapshot of where the problems exist and where we were successful.
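If it helps to picture one of those wiki entries, here is a small Python sketch of the information we record. The class and field names are my own invention for illustration, not anything the wiki or Litmus actually uses.

```python
from dataclasses import dataclass, field


@dataclass
class RunEntry:
    """One logged test run: what was run, on what, by whom, and what broke."""
    suite: str      # which tests were run, e.g. "BFT"
    version: str    # build under test
    platform: str   # operating system the tests ran on
    tester: str     # who ran the tests
    problems: list[str] = field(default_factory=list)

    def summary(self) -> str:
        """Return a one-line snapshot suitable for a wiki table row."""
        if self.problems:
            status = f"{len(self.problems)} problem(s)"
        else:
            status = "no problems"
        return " | ".join(
            [self.suite, self.version, self.platform, self.tester, status]
        )
```

A run with nothing to report would be logged as `RunEntry("BFT", "Minefield 3.0 Alpha 5 Pre", "Linux", "some tester")`, and its `summary()` gives the kind of at-a-glance row that lets everyone see where the problems are.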

The third major task of the week was triaging bugs. Using Bugzilla, a tracking system used to log bugs or issues with our software, we go through and double-check any bugs that are sitting as "unconfirmed". This allows us to identify any serious problems, as well as items that were isolated incidents and have long since been fixed. The process involves finding bugs in Bugzilla that need to be checked and trying to reproduce the issue noted in each bug. If you want to get involved in this aspect of QA, I suggest you come to #testday on IRC sometime. Be warned, some bugs in Bugzilla can be pretty technical. Just seek out the ones that you think you can handle. No one is expected to know everything.

As you can see from my ever-expanding post, Mozilla had quite a busy week. The way things are looking, there are going to be many busy weeks to come. I think I would be more worried if we weren't busy, so this is definitely a good sign.

On that note, I must bid you adieu.

See you next week!
